‘I feel tremendous guilt.’

Those words belong to Chamath Palihapitiya, Facebook's former VP of User Growth. He was answering a question from Stanford students about exploiting consumer behaviour, and went on to say:

‘The short-term, dopamine-driven feedback loops that we have created are destroying how society works.’

In fact, in a recent investigation titled The Facebook Files, the Wall Street Journal reported that Facebook had carried out its own internal research into the impact of its products and, despite damning evidence of real harm to users (including findings linking Instagram to suicidal thoughts among teenagers), carried on with business as usual.

The WSJ's "Facebook Files" is the biggest scoop in the company's history. Internal documents prove:

- Facebook knew its algorithm incentivized outrage
- Instagram knew it hurt teen girls
- Facebook has been shielding VIPs from moderation

Here are the shocking revelations... 🧵

Josh Constine, SignalFire (@JoshConstine), September 16, 2021

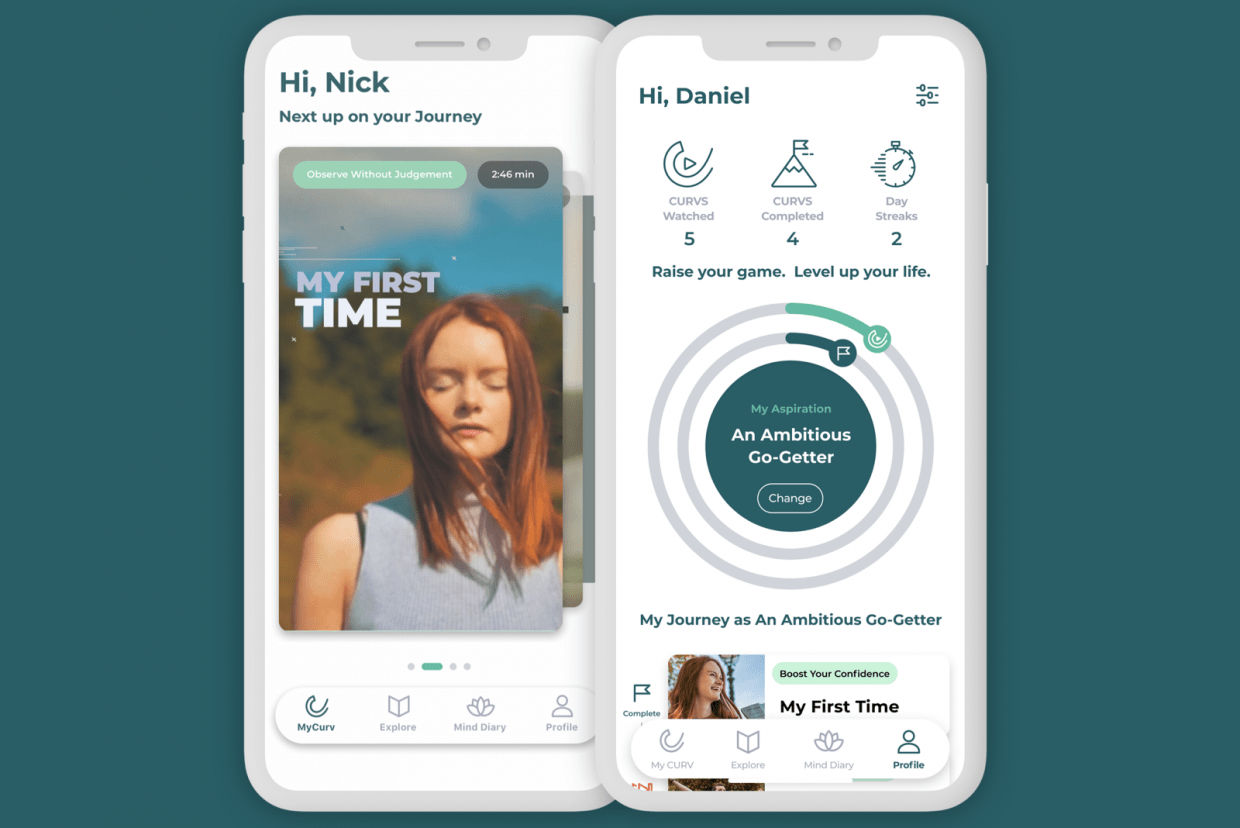

Advocates for humane tech have long argued that we urgently need to redesign how we build apps and online services, especially those aimed at children. Now, data protection law is catching up with those very services. Not that you'd have heard, unless you were paying close attention.

What's the Children's Code?

The Children’s Code (formally the Age Appropriate Design Code) came into full effect in September 2021, after a year-long transition period. It’s a UK statutory code of practice that began life as an amendment to the Data Protection Act 2018, added as a concession to the chair of a child-focused charity.

So what, you might reasonably think: your project has nothing to do with the UK. It doesn’t matter. With some exceptions, if children in the UK are likely to access your product, the Code applies.

So far, all the major tech platforms whose users *might* be children (hello Facebook, TikTok, YouTube, Instagram) have announced new safeguards aimed at protecting the privacy and mental health of minors.

What do you have to do?

We’re hoping this is a sign of things to come: that people who create products and services used by children will have to build watertight privacy and safeguarding into their platforms by default. So, what requirements will you need to meet under the new Code?

The Code is a set of 15 flexible standards (they don't ban or prescribe specific designs) that build protection in, so that children can explore, learn and play online. They do this by making the best interests of the child the primary consideration when designing and developing online services.

Settings must be “high privacy” by default (unless there’s a compelling reason not to); only the minimum amount of personal data should be collected and retained; children’s data should not usually be shared; and geolocation services should be switched off by default. Nudge techniques should not be used to encourage children to provide unnecessary personal data or to weaken or turn off their privacy settings. The Code also addresses parental controls and profiling.
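For product teams, these defaults can be pictured as a settings object in which every privacy-relevant option starts in its most protective state, plus a filter that discards any personal data the service doesn't need. The sketch below is a minimal Python illustration under our own assumptions: the `PrivacySettings` class, its field names, the `minimise` helper and the `REQUIRED_FIELDS` allow-list are hypothetical and are not prescribed by the Code.

```python
from dataclasses import dataclass, replace

# Hypothetical schema: the Code sets outcomes, not field names.
@dataclass(frozen=True)
class PrivacySettings:
    profile_public: bool = False                 # "high privacy" default: profile hidden
    share_data_with_third_parties: bool = False  # children's data not shared by default
    geolocation_enabled: bool = False            # geolocation off by default
    personalised_profiling: bool = False         # profiling off by default

# Hypothetical allow-list: only the data this imaginary service actually needs.
REQUIRED_FIELDS = {"username", "age_band"}

def minimise(submitted: dict) -> dict:
    """Data minimisation: keep only allow-listed fields, drop everything else."""
    return {key: value for key, value in submitted.items() if key in REQUIRED_FIELDS}

def enable_geolocation(settings: PrivacySettings) -> PrivacySettings:
    """Settings are frozen, so loosening one requires an explicit call
    (e.g. triggered by the user's own choice), never a silent default."""
    return replace(settings, geolocation_enabled=True)

if __name__ == "__main__":
    defaults = PrivacySettings()
    print(defaults.geolocation_enabled)  # False
    signup = {"username": "sam", "age_band": "13-15", "email": "sam@example.com"}
    print(minimise(signup))  # {'username': 'sam', 'age_band': '13-15'}
```

Making the object immutable is one design choice among many: it means the protective defaults can only be relaxed through a deliberate, traceable action rather than a pre-ticked box.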

You can review the full contents of the Code here. One aspect that's useful for anyone designing humane tech, whether for children or adults, is the guidance on nudge techniques. A nudge technique is a feature designed into an interface to lead users along the designer’s preferred path by exploiting common biases through language, colour, placement and perceived social norms. If you’re designing an app used by children, the Code is an invitation to take a hard look at whether your preferred path and the path that lies in the user's best interest are one and the same.

We’ll explore nudging and behaviour change in more depth here on the blog in the coming weeks, so subscribe if you want the freshest articles delivered to your inbox.